|

I have been a Postdoctoral research fellow at the AImageLab research laboratory, where I worked on Computer Vision and Deep Learning, with a focus on robotic visual navigation. I've spent a period as Visiting Student Researcher at Stanford University in the Autonomous Systems Lab (ASL) under Prof. Marco Pavone. I did my PhD at University of Modena and Reggio Emilia advised by Prof. Rita Cucchiara, while I pursued my Bachelor's and Master's degrees at Polytechnic University of Milan with an exchange period at Technische Universität Wien. I am a IEEE (Institute of Electrical and Electronics Engineers) and a CVF (The Computer Vision Foundation) member. |

|

Research

|

Roberto Bigazzi Ph.D. Thesis, 2023 pdf / cover / bibtex In this thesis, I present the research work carried out during my Ph.D. that was focused on the challenges defined by the field of Embodied Artificial Intelligence. I would like to thank again my family, friends, and supervisor for the support during the last three years. |

|

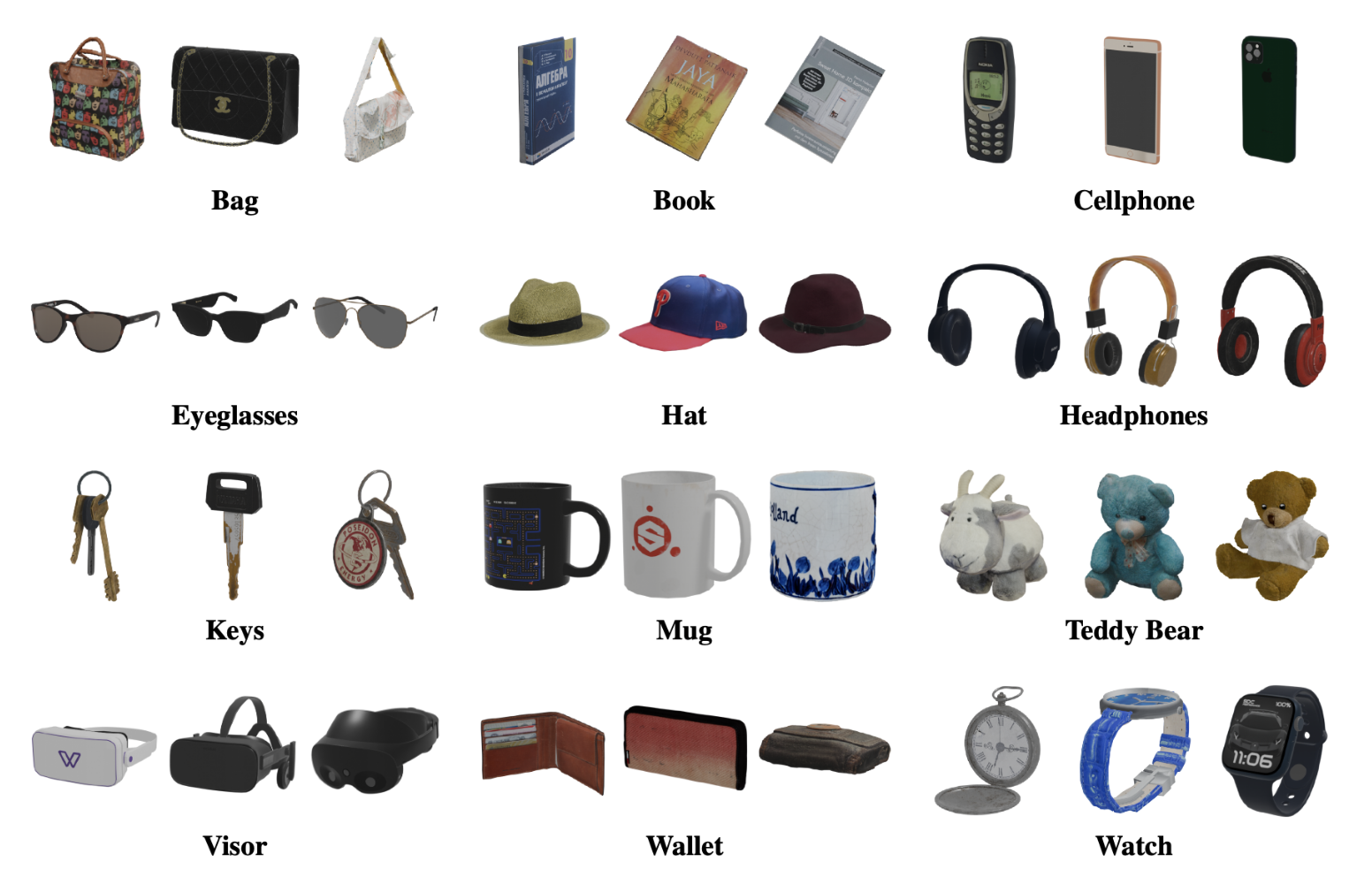

Luca Barsellotti, Roberto Bigazzi, Marcella Cornia, Lorenzo Baraldi, Rita Cucchiara, NeurIPS, 2024 arXiv / website / code / bibtex We propose a new task denominated Personalized Instance-based Navigation (PIN), in which an embodied agent is tasked with locating and reaching a specific personal object by distinguishing it among multiple instances of the same category. The task is accompanied by PInNED, a dedicated new dataset composed of photo-realistic scenes augmented with additional 3D objects. |

|

Niyati Rawal, Roberto Bigazzi, Lorenzo Baraldi, Rita Cucchiara, CVPRW, 2024 arXiv / bibtex We propose a novel architecture inspired by Generative Adversarial Networks (GANs) that produces meaningful and well-formed synthetic instructions to improve navigation agents’ performance. The validation analysis of our proposal on REVERIE and R2R highlights the promising aspects of our proposal, achieving state-of-the-art results. |

|

|

Roberto Bigazzi, Lorenzo Baraldi, Shreyas Kousik, Rita Cucchiara, Marco Pavone ICRA, 2024 (Collaboration with Stanford University and Georgia Tech) arXiv / pdf / bibtex We propose a novel approach that combines visual and fine-tuned CLIP features to generate grounded language-visual features for mapping. These region classification features are seamlessly integrated into a global grid map using an exploration-based navigation policy. |

|

|

Roberto Bigazzi, Marcella Cornia, Silvia Cascianelli, Lorenzo Baraldi, Rita Cucchiara ICRA, 2023 arXiv / bibtex We propose and evaluate an approach that combines recent advances in visual robotic exploration and image captioning on images generated through agent-environment interaction. Our approach can generate smart scene descriptions that maximize semantic knowledge of the environment and avoid repetitions. |

|

Samuele Poppi, Roberto Bigazzi, Niyati Rawal, Marcella Cornia, Silvia Cascianelli, Lorenzo Baraldi, Rita Cucchiara ICIAP, 2023 (Honorable Mention ICIAP Best Paper Award) pdf / bibtex We use explainable maps to visualize model predictions and highlight the correlation between observed entities and words generated by a captioning model during the embodied exploration. |

|

Federico Landi, Roberto Bigazzi, Silvia Cascianelli, Marcella Cornia, Lorenzo Baraldi, Rita Cucchiara ICPR, 2022 arXiv / website / code / bibtex We propose Spot the Difference: a novel task for Embodied AI where the agent has access to an outdated map of the environment and needs to recover the correct layout in a fixed time budget. |

|

|

Roberto Bigazzi, Federico Landi, Silvia Cascianelli, Lorenzo Baraldi, Marcella Cornia, Rita Cucchiara RA-L/ICRA, 2022 arXiv / code / bibtex We propose to train a navigation model with a purely intrinsic reward signal to guide exploration, which is based on the impact of the robot's actions on its internal representation of the environment. |

|

|

Roberto Bigazzi, Federico Landi, Silvia Cascianelli, Marcella Cornia, Lorenzo Baraldi, Rita Cucchiara ICIAP, 2021 arXiv / website / code / bibtex We build and release a new 3D space for embodied navigation with unique characteristics: the one of a complete art museum. We name this environment ArtGallery3D (AG3D). |

|

Roberto Bigazzi, Federico Landi, Marcella Cornia, Silvia Cascianelli, Lorenzo Baraldi, Rita Cucchiara CAIP, 2021 arXiv / code / bibtex We detail how to transfer the knowledge acquired in simulation into the real world describing the architectural discrepancies that damage the Sim2Real adaptation ability of models trained on the Habitat simulator and we propose a novel solution tailored towards the deployment in real-world scenarios. |

|

Roberto Bigazzi, Federico Landi, Marcella Cornia, Silvia Cascianelli, Lorenzo Baraldi, Rita Cucchiara ICPR, 2020 (Oral Presentation) arXiv / bibtex We devise an embodied setting in which an agent needs to explore a previously unknown environment while recounting what it sees during the path. The agent needs to navigate the environment driven by an exploration goal, select proper moments for description, and output natural language descriptions of relevant objects and scenes. |

Reviewing CommitteesJournals:

Conferences:

Other:

|

Certificates and Memberships

|

Teaching Activities

|

Thesis Supervision

|

Courses and Summer Schools

|

International Conferences

|

|

For any issues with this page please let me know here |